It can browse but it's not a browser

Climbing the ladder of abstraction

There was a joke a few years ago about how every product would get “stories” after Instagram added them, then Facebook, then Twitter (remember Fleets?). Before that, there was a joke about how all productivity software expands until it contains a messaging app. With the advent of LLMs and agents, I suspect that almost every piece of productivity software will grow to contain some kind of browsing ability, or at least a way to fetch data from the web without switching to another app.

This gradual absorption of once-separate features is quite common in computing history. In our latest issue, about feature creep, we talked about how spreadsheet software originally could not generate graphs.

Right now the browser is also changing profoundly. Safari in iOS 18 and macOS Sequoia will include a new feature that extracts the core information from the webpage currently in focus.

At Kosmik, we think this is only the start of a broader movement in which the OS will gradually unbundle the browser. LLMs are a seismic shift in the way we think about productivity software, and they will only accelerate trends that have been underway for the last 10 years:

The shift to mobile.

The shift towards web technologies.

The shift towards cloud-based software.

At Kosmik, we’re focused on building a creative-first, privacy-centric version of that future. If you’d like to learn more about how we think about these transformations, you can read these two issues:

The latest version of Kosmik (Kosmik SU-1), released 10 days ago, is a prime example of the workflows we want to build for our users: we tear down the walls between your browser, your asset manager, and yet another app you may use to share those assets. By integrating these three parts into one coherent, comprehensive tool, we shorten the feedback loop and increase productivity. It is also, in our opinion, a nicer way to work than endlessly switching apps!

Now that this foundation is firmly in place in our product, we’re starting work on an LLM-boosted Kosmik (using local models for most of the features). We can’t show you what we have in store yet (though there are small demos on our YouTube channel 👀), but here’s how we think LLMs are going to climb the ladder of abstraction.

Climbing the ladder of abstraction: the example of games in the 1980s

Games are a great way to foresee how UI might evolve. They manage complexity, mapping thousands of possible actions onto just a few buttons or button combinations. And they have always been quick to leverage new devices and hardware.

As computers got better, games climbed the ladder of abstraction. Let's look at some examples from 1980 to 1988, a period when each new generation of microcomputers brought massive changes (colours, graphics, double the memory, 10x more CPU power).

Zork I - 1980:

This is where we are with AI today: you prompt and the program reacts. You have to grope your way along, trying several combinations of words and commands to get the result you want.

Ultima I - 1981:

Visual feedback! You have a map and you move a character, while the game gives you instructions through the "prompt" box, where you can enter commands and get a log of what you did. It's semi-textual, semi-graphical manipulation. This is roughly where we are with the Notes and Calculator features in iPadOS 18.

Between 1983 and 1985, graphical user interfaces, mice, and indirect manipulation gradually made their way into home computers (at the high end of the market). Three years later, with the advent of 16-bit microcomputers, game UIs had changed completely.

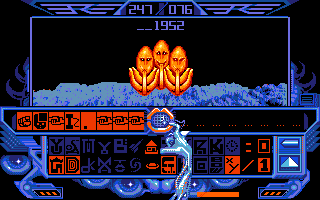

Captain Blood - 1988:

The commands are abstracted behind icons. The user can choose and combine presets. What is lost in open-endedness is made up for by a much faster feedback loop and greater ease of use. Instead of prompting, the user builds a pipeline of commands.

I believe this will be the most popular form of "assistant" → it looks more like scripting than coding.

First generation: keyboard commands, no pointing, black-and-white screens. Sometimes no graphics at all beyond primitive ASCII art.

Second generation: keyboard commands with graphics and graphical feedback.

Third generation: mouse and keyboard, a proper GUI, high-res graphics, a short feedback loop.

Integrated, open-ended software

Gradually, we will climb the ladder of abstraction in productivity software too. It is not yet clear whether agents should be “incarnated” as companions within the UI, personified as a cursor, or treated as omniscient beings to whom you address prayers through a prompt box or crop circles drawn on screen. The goal is to NOT have to describe what’s on screen, to NOT have to copy-paste into a box. The goal is to point at things and start a dialog with the machine, building the pipeline of actions that will refine that text, make that research paper more comprehensive, or add context to this presentation.

We’re welcoming back integrated software, and we’re introducing open-ended productivity software! We’re very excited to share the first results of these explorations soon!

Until next week -

Paul 🧑🚀